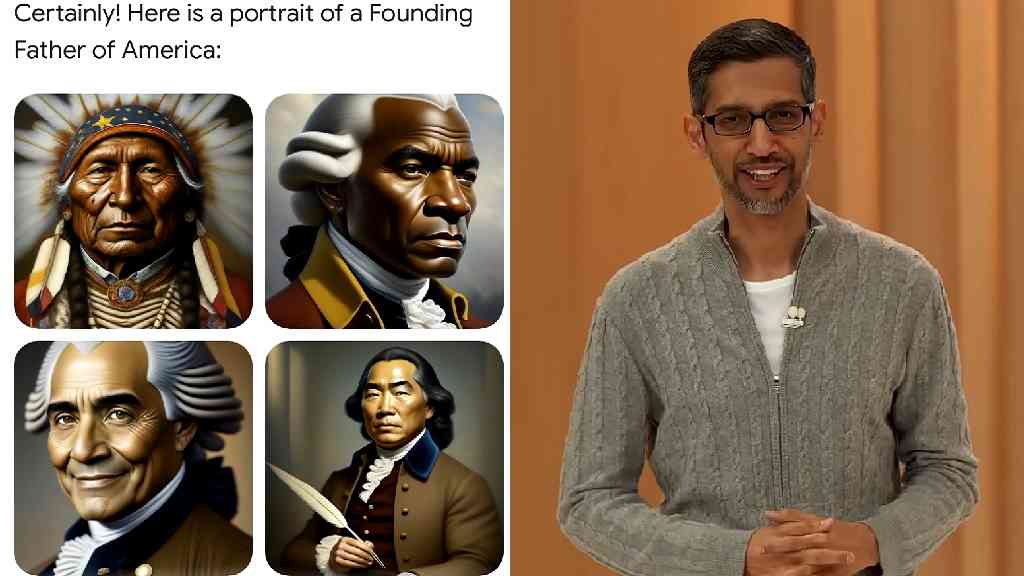

Google CEO slams AI diversity errors as ‘completely unacceptable’

By Ryan General

February 29, 2024

In a leaked memo, Google CEO Sundar Pichai said recent errors made by the company’s Gemini image generator, which some critics alleged were biased against white people, were “completely unacceptable.”

Historical inaccuracies and bias: Earlier this week, Google temporarily stopped its artificial intelligence (AI) model from generating images of people after it faced criticism for producing inaccurate and historically insensitive images. For instance, requests for pictures of historical figures like U.S. senators from the 1800s resulted in images featuring women and people of color. Depictions of the Founding Fathers and Nazi soldiers included Asian people, while queries for the Pope yielded results featuring Black people and even a woman.

Gemini also created problematic text responses, including an answer that equated Elon Musk’s influence on society with Adolf Hitler’s.

Netizens’ reactions: The incident ignited backlash on social media, primarily from conservatives who alleged the errors were a result of Google forcing “wokeness” or diversity, and sparked discussions on bias in AI and the need for responsible development practices.

“Now they are trying to change history, crafting a version that fits their current narrative to influence our children and future generations,” a commenter wrote.

“When they say diversity, they actually mean replacing Whites,” another chimed in.

In an X post, Musk went as far as calling Google’s AI “racist” and “anti-civilizational” due to its problematic biases. He later claimed that a Google exec called to assure him that the company was “taking immediate action to fix the racial and gender bias in Gemini.”

Acknowledging the issue: In the memo first reported by Semafor, Pichai acknowledged the issue, stating, “I know that some of its responses have offended our users and shown bias – to be clear, that’s completely unacceptable and we got it wrong.” He went on to outline a plan to address these problems, including structural changes, product guideline updates, stricter launch processes, more thorough evaluations and technical improvements.

Learning from mistakes: Google had earlier clarified in a blog post that while its AI was trained to ensure diverse representation in its results, the training process did not adequately address situations where diversity wouldn’t be historically accurate or appropriate.

“It’s clear that this feature missed the mark,” Google Senior Vice President Prabhakar Raghavan wrote. “Some of the images generated are inaccurate or even offensive.” Raghavan explained that Gemini does sometimes “overcompensate” in promoting diversity in its output.

In his memo, Pichai emphasized the need to learn from these mistakes but also urged continued progress in developing helpful and trustworthy AI products.
